In data storage, the speed of data transfer is an essential factor when choosing the right system. One technology that accelerates transfers is remote direct memory access (RDMA), which combines low latency, high bandwidth, and a small CPU footprint. But how does it achieve that?

In today’s article, we will explain step by step how RDMA works in a data storage solution:

- Where RDMA comes from

- What is RDMA

- RDMA in Open-E JovianDSS

Where RDMA Comes From

To better understand the value of remote direct memory access, let us start with a short look at its history, or more precisely, at DMA. Direct memory access is a system feature that allows hardware subsystems to access the main system memory (random-access memory, or RAM) independently of the central processing unit (CPU). Why does this matter? It saves time. If the CPU had to carry out every data transfer itself, it would stay occupied for the whole duration of each read or write, and a long queue of I/O operations would build up. With DMA, the CPU only initiates the transfer and then continues with other operations while the transfer is in progress; the DMA controller interrupts it only once the transfer has finished.
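The control flow described above (initiate, keep working, get notified on completion) can be modeled with a minimal Python sketch. It assumes a background thread plays the role of the DMA controller and a `threading.Event` stands in for the completion interrupt; the names `dma_transfer` and `main` are hypothetical. This is an analogy only: in this model the copy is still executed by the CPU, whereas real DMA offloads it to dedicated hardware.

```python
import threading


def dma_transfer(src: bytearray, dst: bytearray, on_complete) -> None:
    """Hypothetical 'DMA engine': copies src into dst on its own thread,
    then signals completion by calling on_complete (the 'interrupt')."""
    dst[:] = src  # the bulk copy runs here, not on the thread that requested it
    on_complete()


def main():
    src = bytearray(b"x" * 1_000_000)  # data to be moved
    dst = bytearray(len(src))          # destination buffer in "main memory"
    done = threading.Event()           # stands in for the completion interrupt

    # The "CPU" (main thread) only initiates the transfer...
    engine = threading.Thread(target=dma_transfer, args=(src, dst, done.set))
    engine.start()

    # ...and carries on with unrelated work while the copy is in flight.
    other_work = sum(i * i for i in range(10_000))

    # Handle the "interrupt": wait until the engine reports completion.
    done.wait()
    engine.join()
    return other_work, dst == src


if __name__ == "__main__":
    work, transferred = main()
    print(f"other work result: {work}, transfer complete: {transferred}")
```

The main thread never touches the buffers after starting the worker; it only reacts to the completion signal, which mirrors the division of labor between the CPU and a DMA controller.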

What is RDMA?

RDMA (remote direct memory access) is a narrower topic: it is an extension of the direct memory access described above. As the name suggests, it allows a machine to access memory on a remote machine without involving the CPU of that remote system. What makes RDMA special, and why is it so popular in data storage infrastructures?

Firstly, it supports zero-copy networking, which allows applications to transfer data without involving the network software stack. As a result, the data is not copied between network layers but goes directly between application buffers. Secondly, it bypasses both the kernel and the CPU (CPU bypass is inherited from DMA), which results in a smaller CPU footprint and frees up cache resources.
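The difference between copying data through several layers and zero-copy access can be illustrated with a small Python model. This is only a single-process analogy and does not use RDMA, which in practice relies on RDMA-capable NICs and registered memory regions; the functions `layered_copy` and `zero_copy` and the `registered` buffer are hypothetical names chosen for illustration.

```python
def layered_copy(buf: bytearray, layers: int) -> bytearray:
    """Model of a conventional network stack: each layer passes a fresh copy on."""
    data = buf
    for _ in range(layers):
        data = bytearray(data)  # new allocation plus a full copy at every layer
    return data


def zero_copy(buf: bytearray) -> memoryview:
    """Model of zero-copy: the consumer gets a view of the same buffer, no copy."""
    return memoryview(buf)


# Hypothetical application buffer that both sides can reach.
registered = bytearray(b"block-data")
copied = layered_copy(registered, layers=3)
view = zero_copy(registered)

# The producer updates its buffer in place (same length, so the view stays valid).
registered[0:5] = b"BLOCK"

print(bytes(copied[:5]))  # b'block': the copy is stale and occupied extra memory
print(bytes(view[:5]))    # b'BLOCK': the view reads the original memory directly
```

Each layer in `layered_copy` costs an allocation and a full pass over the data, while the view costs neither; that saved work is, in spirit, what zero-copy networking avoids on the CPU.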

To transfer application data, remote direct memory access requires RDMA-capable link-layer networking technologies, such as InfiniBand or Ethernet with RoCE.

RDMA in Open-E JovianDSS

In Open-E JovianDSS Up29r2, RDMA means RDMA over Converged Ethernet (RoCE). Currently, it supports only the connection between the nodes in a non-shared storage cluster configuration (RDMA connections for the replicated write log in shared storage clusters, and for client-server connections, will be implemented in one of the upcoming software updates). For our OECE engineers seeking more technical details: we use iSCSI Extensions for RDMA (iSER) to enable the RDMA connection. This way, RDMA increases the performance between the nodes by using RoCE-compatible controllers. It delivers higher bandwidth for block storage transfers with zero-copy behavior. Additionally, it offers the lowest CPU utilization and the lowest latency, combined with the stability and other benefits of the iSCSI protocol, such as high availability and security. Thanks to this combination, RoCE turns out to be the fastest solution in storage area network configurations.

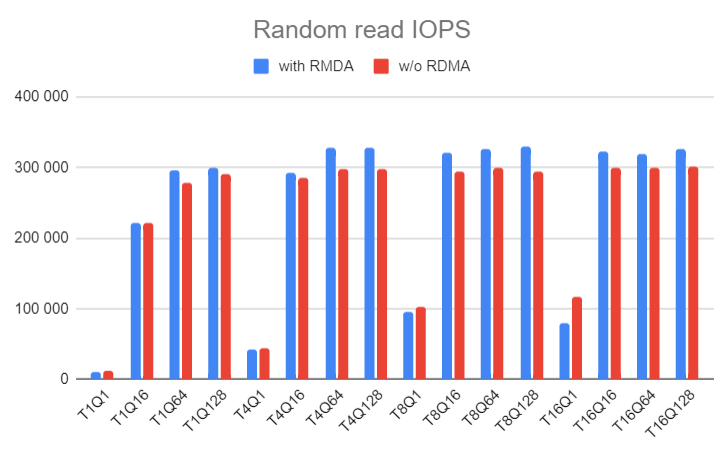

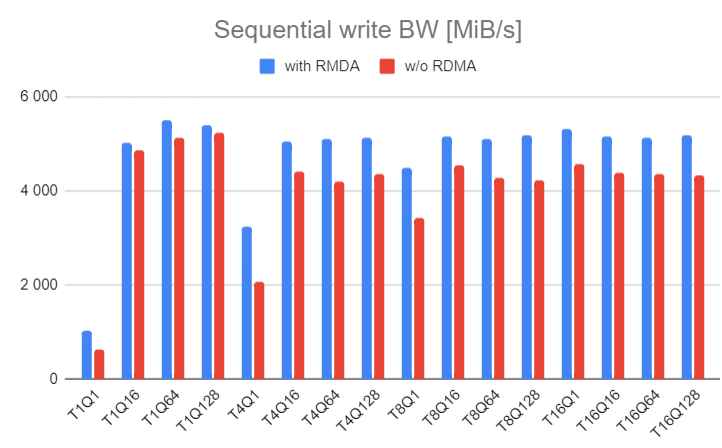

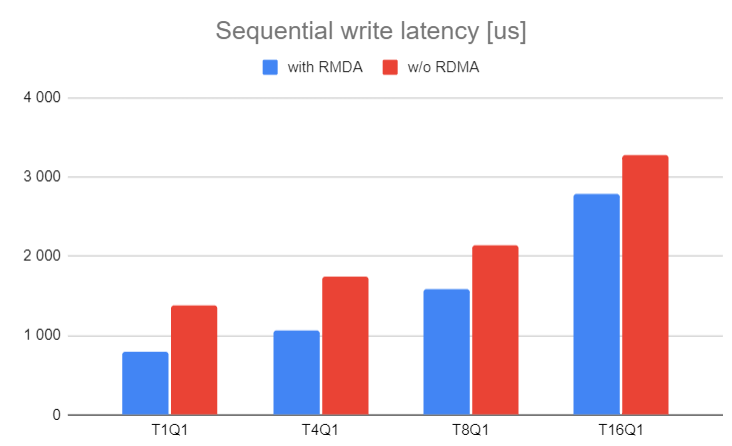

For example, tests of Open-E JovianDSS with RDMA on the mirroring path connection, using Mellanox and ATTO 100GbE NICs, showed significant performance gains, mainly for write workloads:

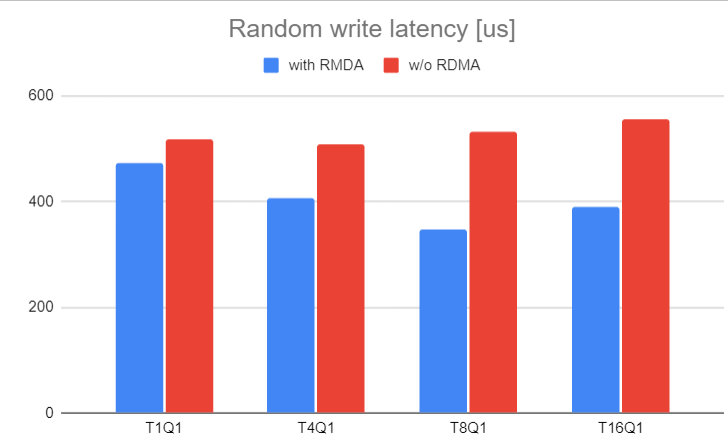

In addition, the tests showed lower write latency on an Open-E JovianDSS-based system with RDMA:

Find more details about what’s new in Open-E JovianDSS up29r2 in the Release Notes.

The Up29r2 version of Open-E JovianDSS can be downloaded here.