Like many terms in IT, “provisioning” can mean several things. For instance:

- user provisioning,

- server provisioning,

- service provisioning, and

- network provisioning.

All of these exist and refer to different aspects of IT infrastructure, so it’s important to clarify which kind of provisioning is discussed here. In this case, we’re referring to the provisioning that occurs when we allocate space in data storage virtualization systems, centralized disk storage systems, and storage area networks (SANs). Using Open-E JovianDSS, it’s possible to allocate this space in two different ways: through thick-provisioning or through thin-provisioning. Over-provisioning is also available by choosing the thin-provisioning option.

Data Storage Thick-Provisioning – What is it?

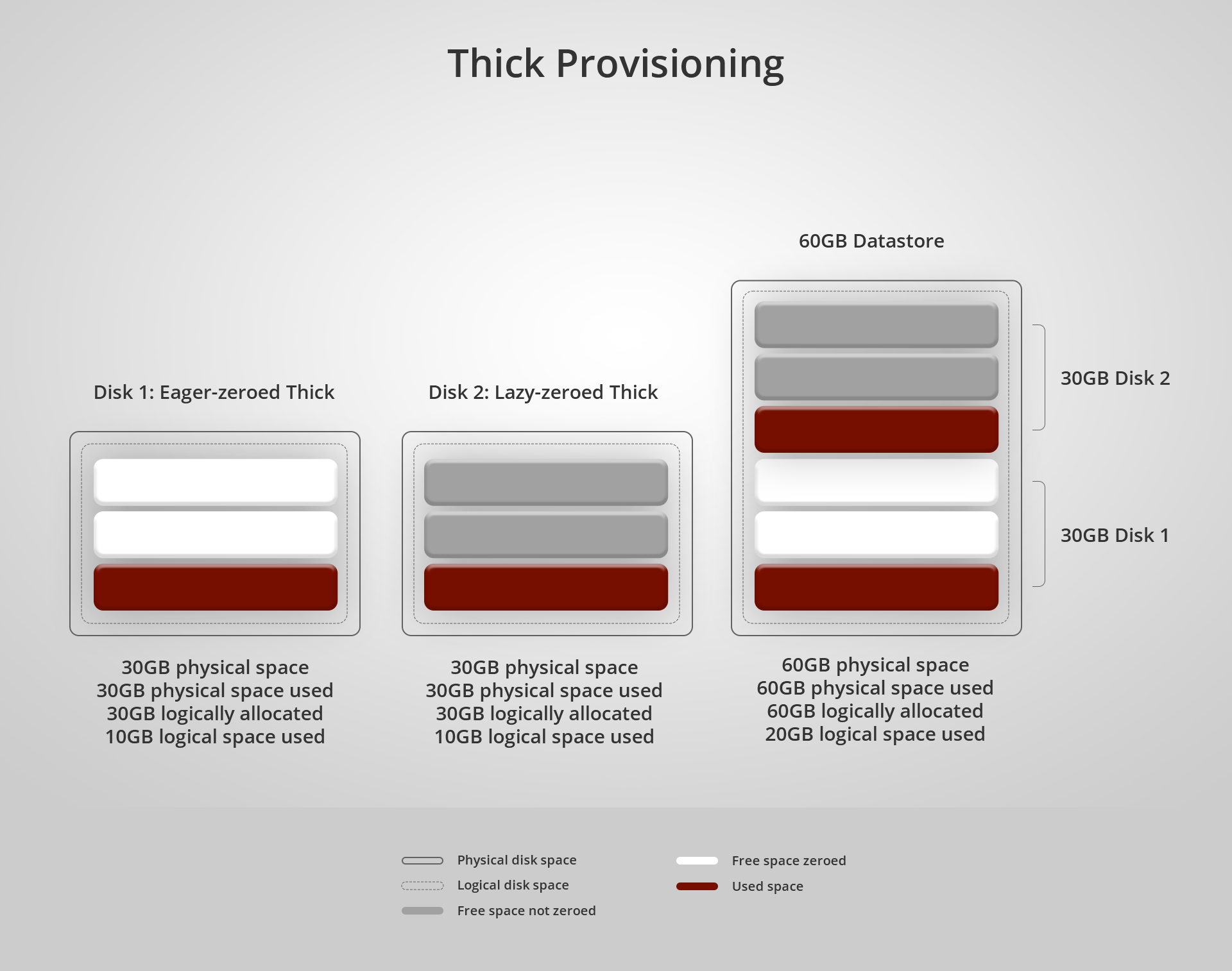

Thick-provisioning, sometimes called “fat provisioning”, is the most conventional way to allocate storage space. When using this method, any time space becomes logically assigned, it also becomes physically blocked off on the disks and, therefore, cannot be used by anything other than the volume it’s allocated to. This method comes in two widely used variants – lazy-zeroed and eager-zeroed thick-provisioning. It’s important to note that Open-E JovianDSS uses the lazy-zeroing approach to thick-provisioning.

Before going any further, let’s clarify what zeroing is. When a system marks something for deletion, the old data generally isn’t erased right away. Instead, it remains on the disk until it is overwritten. Zeroing is the process of overwriting that stale data with zeroes so that the space is clean before new data is written to it. Eager-zeroed volumes zero all of their space at creation time, while lazy-zeroed volumes zero each block only when it is first written.
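To make the two variants concrete, here is a minimal Python sketch. It is a toy model rather than how Open-E JovianDSS or any hypervisor implements zeroing: the `ThickVolume` class, its counters, and the block count are all hypothetical. It assumes the common definition in which an eager-zeroed volume zeroes every block at creation and a lazy-zeroed volume zeroes a block the first time it is written.

```python
class ThickVolume:
    """Toy model of a thick-provisioned volume made of fixed-size blocks."""

    def __init__(self, num_blocks, eager):
        # In both variants every block is reserved up front.
        # None marks a block that still holds stale, un-zeroed data.
        self.blocks = [None] * num_blocks
        self.zero_ops_at_create = 0
        self.zero_ops_on_write = 0
        if eager:
            # Eager zeroing: pay the whole zeroing cost at creation time.
            for i in range(num_blocks):
                self.blocks[i] = 0
                self.zero_ops_at_create += 1

    def write(self, index, value):
        if self.blocks[index] is None:
            # Lazy zeroing: zero the block just before its first write.
            self.blocks[index] = 0
            self.zero_ops_on_write += 1
        self.blocks[index] = value


eager = ThickVolume(num_blocks=8, eager=True)
lazy = ThickVolume(num_blocks=8, eager=False)
for vol in (eager, lazy):
    vol.write(0, 42)
    vol.write(0, 43)  # second write to the same block needs no zeroing
    vol.write(3, 7)

print(eager.zero_ops_at_create, eager.zero_ops_on_write)  # 8 0
print(lazy.zero_ops_at_create, lazy.zero_ops_on_write)    # 0 2
```

In this model the eager volume does all of its zeroing before the first write arrives, while the lazy volume is created instantly and pays a small extra cost on the first write to each block.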

Pros:

There are multiple benefits to using thick-provisioning, some of them true of both variants and some true of only one or the other. Let’s start with the general advantages of thick-provisioning.

- No unexpected lack of space – in general, when using thick-provisioning, the main benefit is that there’s no need to worry about possibly running out of space unexpectedly. Once 50GB is set aside for whatever the task may be, there is definitely going to be 50GB there.

- Significantly less oversight needed – in conjunction with the above, once the space is issued, it will be there and everyone knows how much space they have to work with. As such, significantly less oversight is required by administrators for the system to function correctly.

- Less fragmentation and moving parts – there’s significantly less chance of data becoming fragmented, which could, in turn, cause higher latency. This also means that should any data corruption occur, there’s a higher likelihood that the system can be repaired, due to the fact that the space is already allocated sequentially, making both the users’ and administrators’ lives easier.

Cons:

There are also multiple downsides to using thick-provisioning, some associated with a specific variant and others with thick-provisioning as a whole. Let’s take a look at some of those now.

- Wasted space – when allocating with thick-provisioning, most of the time, there’s going to be idle space that just sits there reserved even though it may never be used. This space still costs energy and money to maintain even though it’s not used, and that’s before counting the upfront cost of purchasing additional disks if there’s not enough space at the beginning of a project.

- Long initial creation process – because the eager-zeroed variant needs to zero out all the data first, this process is significantly longer than the creation of either a thin-provisioned disk or a lazy-zeroed thick-provisioned disk.

- Slower write speed – lazy-zeroed thick-provisioned disks initially write significantly more slowly than their eager-zeroed counterparts, at speeds similar to those of thin-provisioned disks, because each block has to be zeroed before its first write. This downside is present only at the beginning, though: once the zeroing in lazy-zeroed systems finishes, the speed averages out to almost the same in either variant.

Recommendation:

Suppose you run a massive enterprise, with a budget to match, that relies on getting the most performance out of every part of its IT system. In this case, it may be worth using eager-zeroed thick-provisioned disks for faster write performance and the assurance that the storage won’t be mismanaged. If you’re more dependent on being able to create disks quickly and don’t mind the initially slower write speed, while still wanting the security of knowing that the storage space will be there when you need it, lazy-zeroed thick-provisioned disks will work just fine for your purposes. Thick-provisioning is not recommended for the budget-conscious or for those looking for storage space efficiency, because reserved but unused space still consumes hardware and power, which in turn raises costs.

Data Storage Thin-Provisioning – What is it?

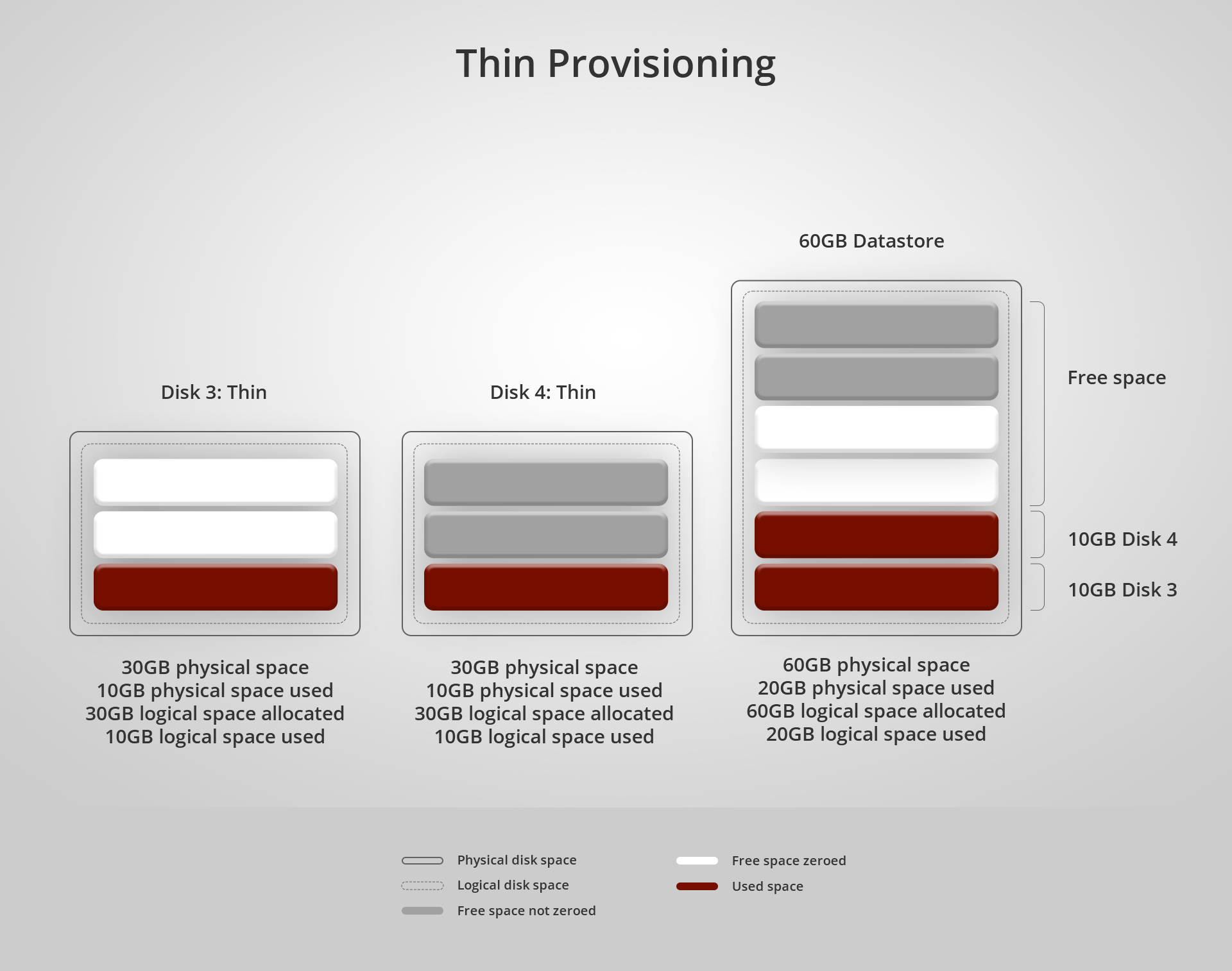

Thin-provisioning was the tech community’s response to the wasted space problem caused by thick-provisioning. In essence, thin-provisioning allows logical space to be allocated without physically reserving that space until it’s actually used. So if an IT administrator wanted to allocate 50GB of space to a user, they could. If that user then only used 10GB of that space, only that 10GB would be reserved physically. The rest could still be used by somebody else despite the original user having 50GB reserved logically. In this way, there’d be a lot less wasted space.

As mentioned before, this is done by assigning the space logically but not actually reserving it physically until something is written. In this way, you could actually create as many volumes as you’d like on a system, and they’d all function until the physical cap is met. So, for instance, an administrator could create one hundred volumes of 100GB despite only having a total of 100GB of physical storage, and each of those volumes could be used until the aggregate total reached 100GB. With thick-provisioning, if you only had 100GB of physical storage and you created a volume for 100GB, then you wouldn’t be able to create another volume because all of that space would have been reserved both logically and physically whether it was used or not.
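The hundred-volume example above can be sketched in a few lines of Python. This is an illustrative toy model under simplifying assumptions (whole gigabytes, no metadata overhead, no compression); the `Pool` class, its methods, and the `OutOfSpace` exception are hypothetical names and not part of any real storage API.

```python
class OutOfSpace(Exception):
    """Raised when a request exceeds the logical or physical capacity."""


class Pool:
    """Toy storage pool that tracks physical space in whole gigabytes."""

    def __init__(self, physical_gb):
        self.physical_gb = physical_gb
        self.reserved_gb = 0  # blocked off by thick volumes at creation
        self.written_gb = 0   # consumed by thin volumes as data arrives

    def free_gb(self):
        return self.physical_gb - self.reserved_gb - self.written_gb

    def create_thick(self, size_gb):
        # Thick: the full size must be physically available right now.
        if size_gb > self.free_gb():
            raise OutOfSpace("not enough physical space to reserve")
        self.reserved_gb += size_gb
        return {"size_gb": size_gb, "used_gb": 0}

    def create_thin(self, size_gb):
        # Thin: only a logical size is recorded; nothing is reserved yet.
        return {"size_gb": size_gb, "used_gb": 0}

    def write_thin(self, volume, gb):
        if volume["used_gb"] + gb > volume["size_gb"]:
            raise OutOfSpace("volume's logical size exceeded")
        if gb > self.free_gb():
            raise OutOfSpace("pool's physical space exhausted")
        volume["used_gb"] += gb
        self.written_gb += gb


# Thin: one hundred 100GB volumes on 100GB of physical storage.
thin_pool = Pool(physical_gb=100)
thin_volumes = [thin_pool.create_thin(100) for _ in range(100)]
for volume in thin_volumes:
    thin_pool.write_thin(volume, 1)  # aggregate usage climbs to 100GB

try:
    thin_pool.write_thin(thin_volumes[0], 1)  # the 101st gigabyte
    thin_overflow_rejected = False
except OutOfSpace:
    thin_overflow_rejected = True

# Thick: the first 100GB volume reserves everything, the second cannot fit.
thick_pool = Pool(physical_gb=100)
thick_pool.create_thick(100)
try:
    thick_pool.create_thick(100)
    second_thick_rejected = False
except OutOfSpace:
    second_thick_rejected = True

print(thin_pool.free_gb(), thin_overflow_rejected, second_thick_rejected)
```

Note that the failing thin write targets a volume that still has 99GB of logical space left, which is exactly the situation end-users can run into when the shared physical space is exhausted.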

Pros:

- Space management – as mentioned above, the main positive of using thin-provisioning is the lack of wasted space that would typically be present in any thick-provisioned volume.

- Most Cost-Efficient Solution – this lack of wasted space is great in terms of both cost as well as power efficiency and really starts to add up quite a bit as more and more volumes are created.

Cons:

- Oversight and Competence required – because thin-provisioned space is not blocked off like thick-provisioned space, administrators need to carefully monitor and manage the space to ensure that it doesn’t run out. End-users may face situations where they have logical space to save files but can’t do so. This happens when the physical space is already occupied by another volume and the administrators fail to add more physical space in time, which, in turn, could have a negative knock-on effect on the company’s technological capabilities and the IT team in general.

- Maintenance and defragmentation – because thin-provisioned volumes allocate more space only when it is needed, they can occasionally suffer from fragmentation and other issues that thick-provisioned disks don’t suffer from. This also has more of an impact on how administrators use their time, as they have to monitor their system and add more space in real-time as opposed to preallocating it all at the beginning.

- Lack of elasticity – with thin-provisioning, there are a lot of opportunities to save space. That being said, it should be noted that in some implementations of thin-provisioning, it’s not possible to release physical space once allocated. However, ZFS is certainly not one of those systems and does actually allow you to regain this allocated space, so this isn’t as much of an issue. Knowing which systems support reclaim/trim and which do not is very important for administrators.

- More training required – administrators might need more training due to the extra monitoring required, allocation being done on-the-fly instead of being preallocated, and potentially challenging defragmentation processes that are required in order for thin-provisioned disks to function properly. It should be noted that ZFS-based software, in particular, is actually quite good at preventing fragmentation, so this can be mitigated to a large degree by choosing systems that account for this fault. Open-E JovianDSS is excellent at this, as it automates some of these challenges and ensures that the engineers have the monitoring capabilities to be fully aware of the system’s status at all times.
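The monitoring burden described in the cons above often comes down to watching physical utilisation against alert thresholds. The following Python sketch shows the idea. The `pool_status` function and the 80% and 90% thresholds are illustrative assumptions, not values taken from Open-E JovianDSS or any other product.

```python
def pool_status(physical_gb, used_gb, warn_at=0.80, critical_at=0.90):
    """Classify the physical utilisation of a thin-provisioned pool.

    The default thresholds are hypothetical; pick values that leave
    enough time to buy and install additional disks.
    """
    if physical_gb <= 0:
        raise ValueError("physical_gb must be positive")
    utilisation = used_gb / physical_gb
    if utilisation >= critical_at:
        return "critical"
    if utilisation >= warn_at:
        return "warning"
    return "ok"


for used in (40, 85, 95):
    print(used, pool_status(physical_gb=100, used_gb=used))
```

A check like this would typically run on a schedule and feed an alerting system, so that administrators hear about a filling pool well before end-users hit a failed write.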

Recommendation:

Thin-provisioning is absolutely fantastic for any organization looking to save money on its storage needs, with one caveat: any organization that wants to use thin-provisioning first needs to ensure that it has people who can handle the responsibility that comes with managing and monitoring the thin-provisioned disks. If those individuals are already employed by the organization, or the organization is willing to spend a bit more upfront to employ them, then using thin-provisioned disks is a great way to save money on both electricity and IT bills. This really adds up in larger organizations that could potentially have hundreds or thousands of active volumes that would otherwise all waste quite a bit of storage space as thick-provisioned disks.

Data Storage Over-Provisioning – What is it?

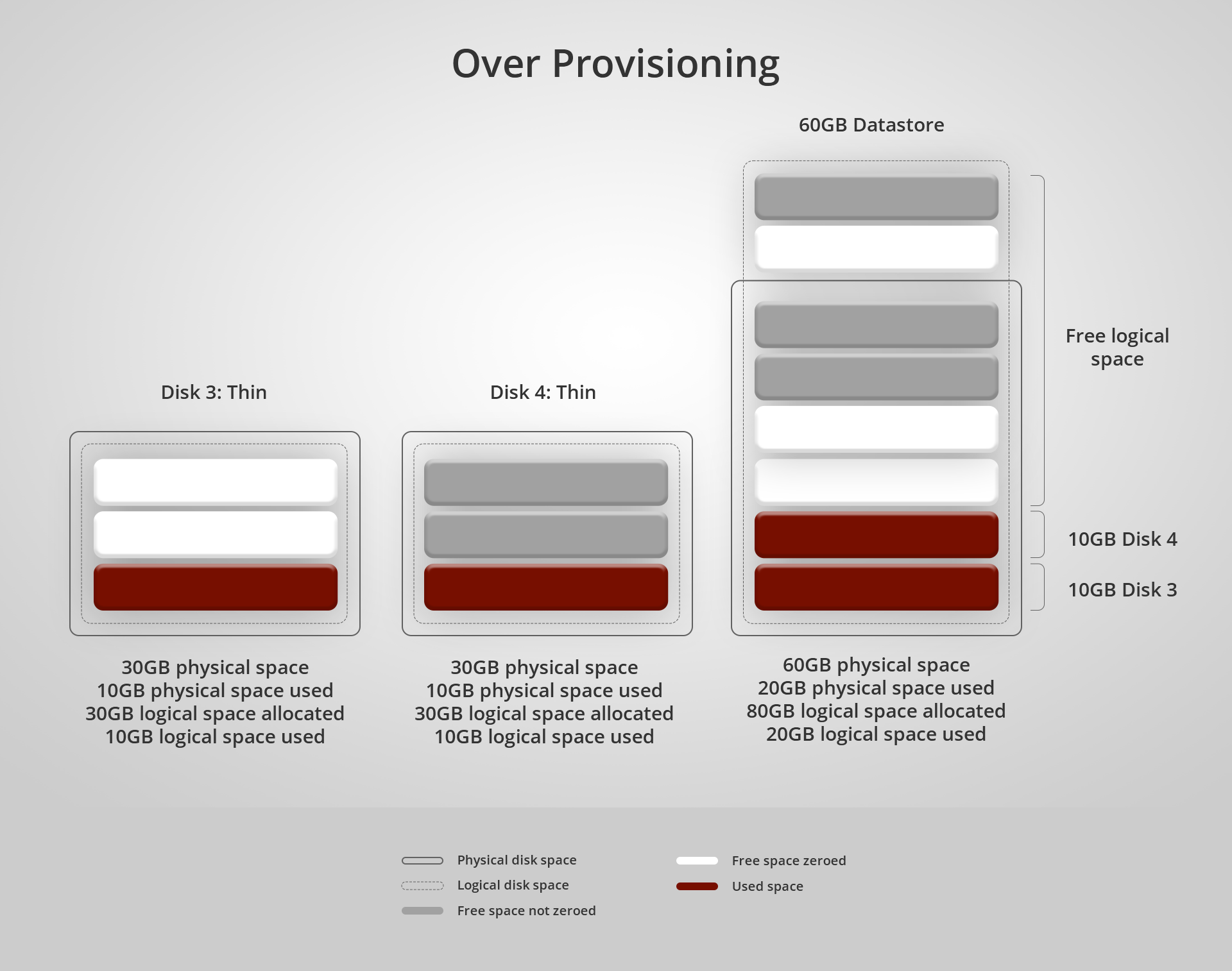

Over-provisioning, also sometimes referred to as over-allocation, is a feature of some thin-provisioning implementations that makes it possible to assign more space to a virtual disk than is physically available. For instance, giving a user 50GB of storage space while only having physical disks that total 25GB is what over-provisioning refers to in this case.
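The arithmetic behind that example is simple enough to sketch in Python. The function names below are hypothetical helpers for illustration; the only figures taken from the text are the 50GB of logical space and the 25GB of physical space.

```python
def overprovisioning_ratio(logical_sizes_gb, physical_gb):
    """Total logical space promised divided by physical space available."""
    if physical_gb <= 0:
        raise ValueError("physical_gb must be positive")
    return sum(logical_sizes_gb) / physical_gb


def worst_case_shortfall_gb(logical_sizes_gb, physical_gb):
    """Physical space still missing if every volume filled up completely."""
    return max(0, sum(logical_sizes_gb) - physical_gb)


ratio = overprovisioning_ratio([50], physical_gb=25)
shortfall = worst_case_shortfall_gb([50], physical_gb=25)
print(ratio, shortfall)  # 2.0 25
```

A ratio above 1.0 means the pool is over-provisioned, and the shortfall is the amount of physical storage that would have to be added before every volume could be filled to its logical size.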

Pros:

- Able to go beyond the physical limits of a setup – whether there’s a sudden, unexpected need for more storage or the organization wants to start a new application and is just not sure how much storage it needs, administrators can allocate a very large amount of logical space so that users aren’t caught out with a volume smaller than they need. It’s most often used because the filesystem that will reside on the volume has no option to resize.

- Lower upfront costs – the fact that the logical limits are greater than the physical limits allows administrators to set up systems without the requisite physical disks already being purchased, which lowers the initial outlay on a project. It also gives the user the option to start a new project without having all the hardware on site, which is great for those times when hardware shortages occur or when you’d like to finance a project bit by bit instead of all at once.

Cons:

- Reliance on over-provisioning – any time an organization has run out of physical storage and is relying on over-provisioning is a very risky time, as anything that goes wrong could spiral out of control rather quickly. Put simply, without the proper physical equipment, the system could fail. This is compounded by the fact that when exactly the physical equipment can be obtained is normally out of the control of most businesses. They are subject to market demand, delays, irregular government regulations, and a wide variety of other factors that can be really hard to properly account for, which greatly increases the risk involved.

- Builds bad habits – over-provisioning allows administrators to not worry about physical space since they can create many large volumes without the physical space limitations they’d typically find in thick-provisioning. As such, this could encourage bad habits and negligence due to the safety net of having the extra logical space available.

- Even more training required – special training might be required for administrators to learn how to properly maintain and defragment the system. On top of this, there might be even more training required to show administrators how to properly handle the system once it’s run out of physical space and for them to become skilled in creating proper plans as to what to do should that ever happen. As mentioned before, this downside can largely be mitigated by using systems designed with ease in mind like Open-E JovianDSS. With the proper monitoring and automation tools, the training required is significantly decreased.

Recommendation:

Using the over-provisioning feature of thin-provisioning is recommended for any company or enterprise that has highly experienced staff to ensure that the entire storage system won’t collapse if they’re ever caught being dependent on it. If that staff is there or the company is willing to invest in acquiring that staff, then over-provisioning can allow a company to benefit from the savings of having thin-provisioned volumes while also giving it the flexibility to handle any sudden spikes in storage requirements. It’s quite amazing if you can keep it all under control and in order. Alternatively, the company could choose to invest in solutions that mitigate this problem. This is actually one of the reasons why Open-E JovianDSS is so amazing, as it does a lot of this work for companies. It also provides the tools needed to properly monitor the system and actively sends appropriate warnings and events to administrators, giving them more room for error.

The Wrap Up

This concludes our brief guide on provisioning. Now it’s your turn. What do you think: is thick-provisioning better than thin-provisioning? Is there actually a difference in performance between eager-zeroed and lazy-zeroed thick-provisioned disks? Is over-provisioning a trap? Let us know in the comments below!